Netflix, Stitch Fix, and Why We Love Outcomes Data

If you know anything about AdeptID, you probably know one of us (Fernando or Brian) personally.

If you know a bit more, you might also know that we’re using machine learning to make job transitions easier for Americans without college degrees (and for the employers who want to hire them).

We started AdeptID because we saw a mismatch between talent and demand that looks a lot like matching problems that data-driven ventures have already solved in other sectors.

That’s why we set out to build a recommendation engine that matches talent with attractive jobs.

There are two recommendation engines that are particularly inspirational to us:

-

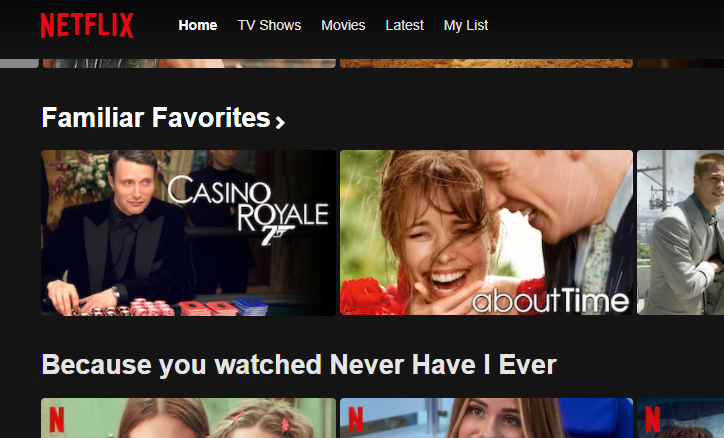

Netflix uses your viewing history and the observations of millions of people watching their content to predict what you’ll want to watch and then present it to you (they even have a % match similar to our AdeptID score)

-

StitchFix uses your expressed clothing preferences and the demonstrated purchasing preferences of thousands of other users to predict and then present the items you are likely to keep from one of their style boxes

Crucially, these models don't just run on what users say they like; they also account for what users actually do (which shows they watch, which clothes they buy). In other words, they learn from outcomes. I may have told Netflix I will eventually watch Frost/Nixon, but its models know how many times I've rewatched Casino Royale instead. Without outcomes data, both companies' predictions would be less accurate and less useful to their users.

We believe that one reason existing efforts to match talent to demand (and talent to training) have been so unsuccessful is that they do not take this outcomes data into account.

Our priority is to collect and learn from outcomes data: Who is getting hired? Who is not getting hired? Are they still working in the same role two years later? Have they been promoted?

With this data, we can look at individuals' work histories and potential target jobs, infer a set of latent skills and attributes, and then predict the likelihood of a successful placement and recommend training interventions based on the real results of others like them.

There are plenty of impressive, well-intentioned efforts to manually define skills and tag jobs with them in the form of skills taxonomies. However, these efforts won't surface actionable insights until we establish how those skills relate to real hiring and labor market results. Ingesting both outcomes and skills data allows us to answer the "So what?" questions that employers and training providers will inevitably ask: "Which candidates are most likely to succeed? Which new skills would make the most impact?"

Understandably, employers and training providers consider this outcomes data highly sensitive, which means we need to meet very high bars for security and privacy. Furthermore, organizations' people data is typically stored in headache-inducing HRIS (human resources information systems), which Brian has mastered in order to make our product easier to use.

These investments are worth it. Models trained on outcomes data offer tremendous new value to the organizations that originally held the data. Over time, our insights can help employers hire better and more inclusively, and our recommendations can help training providers dramatically improve their placement rates. Outcomes data is the key that unlocks these capabilities.

If you’ve made it this far, you’ve probably picked up on how much we care about this subject. We hope you can add our obsession with outcomes data to the list of things you know about AdeptID.

If you have outcomes data and want to improve your hiring pipeline (or you just want to talk about it), please reach out.